Install AWS CLI in Docker Container

Share and deploy container software, publicly or privately

Amazon Elastic Container Registry (ECR) is a fully managed container registry that makes it easy to store, manage, share, and deploy your container images and artifacts anywhere. Amazon ECR eliminates the need to operate your own container repositories or worry about scaling the underlying infrastructure. Amazon ECR hosts your images in a highly available and high-performance architecture, allowing you to reliably deploy images for your container applications. You can share container software privately within your organization or publicly worldwide for anyone to discover and download. For example, developers can search the ECR public gallery for an operating system image that is geo-replicated for high availability and faster downloads. Amazon ECR works with Amazon Elastic Kubernetes Service (EKS), Amazon Elastic Container Service (ECS), and AWS Lambda to simplify your development-to-production workflow, and with AWS Fargate for one-click deployments. You can also use ECR with your own container environment. Integration with AWS Identity and Access Management (IAM) provides resource-level control of each repository. With ECR, there are no upfront fees or commitments. You pay only for the amount of data you store in your repositories and data transferred to the Internet.

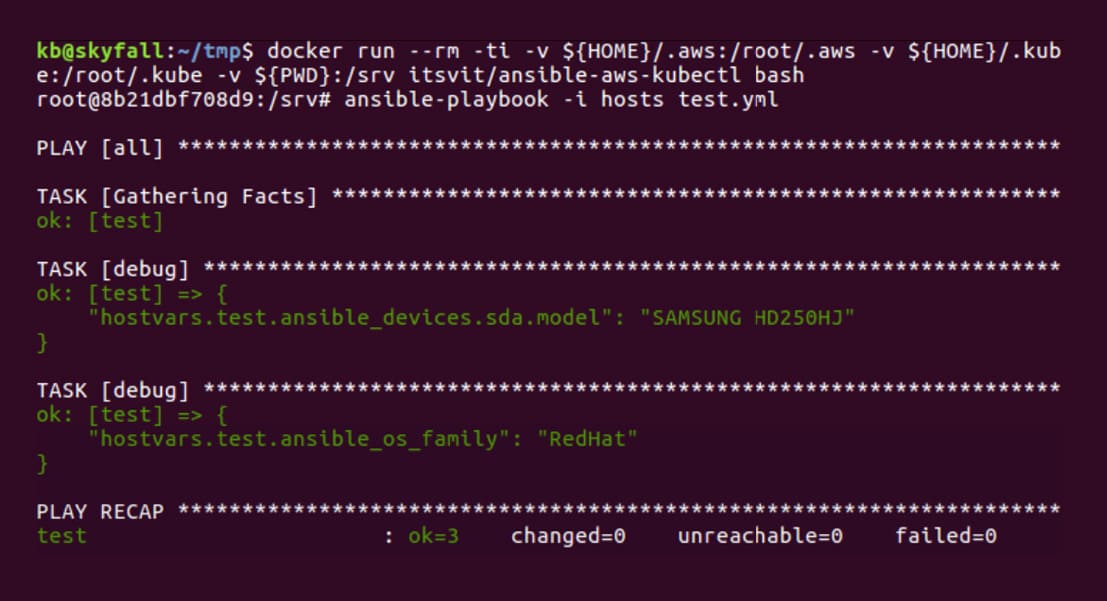

This allows the AWS SDK used by application code to access a local mock container as the “AWS metadata API” and retrieve credentials from your own local .aws/credentials config file.

Install the Docker Compose CLI on Linux. The Docker Compose CLI adds support for running and managing containers on ECS. Prerequisites: Docker 19.03 or later.

Preparing the LocalStack container. To set up Docker, download and install it, then check the installation with the following command (use PowerShell): docker --version. Once Docker is running, pull the LocalStack image. The image is almost 500 MB to download and around 1 GB uncompressed.

The first step is to install the CLI. Instructions to install the CLI on both Mac and Linux are explained very clearly in the official docs. Install the CLI and, when you are done, verify the install by running ecs-cli --version, which prints output such as ecs-cli version 1.18.1 (7e9df84). Next, configure the CLI so that it can talk to ECS.

You must stop the old Rancher server container that uses the incorrect IP for --advertise-address and start a new Rancher server with the correct IP for --advertise-address. When running Rancher Server behind an Elastic/Classic Load Balancer (ELB) in AWS, we recommend using an ELB in front of your Rancher servers.

Docker registry: an application responsible for managing storage and delivery of Docker container images. It can be private or public.

Install Docker CE on CentOS 8 / RHEL 8. So far we have covered the Docker introduction and terminology. We should be ready to install Docker CE on RHEL 8 / CentOS 8.

Benefits

Reduce your effort with a fully managed registry

Amazon Elastic Container Registry eliminates the need to operate and scale the infrastructure required to power your container registry. There is no software to install and manage or infrastructure to scale. Just push your container images to Amazon ECR and pull the images using any container management tool when you need to deploy.

Securely share and download container images

Amazon Elastic Container Registry transfers your container images over HTTPS and automatically encrypts your images at rest. You can configure policies to manage permissions and control access to your images using AWS Identity and Access Management (IAM) users and roles without having to manage credentials directly on your EC2 instances.

Provide fast and highly available access

Amazon Elastic Container Registry has a highly scalable, redundant, and durable architecture. Your container images are highly available and accessible, allowing you to reliably deploy new containers for your applications. You can reliably distribute public container images as well as related files such as Helm charts and policy configurations for use by any developer. ECR automatically replicates container software to multiple AWS Regions to reduce download times and improve availability.

Simplify your deployment workflow

Amazon Elastic Container Registry integrates with Amazon EKS, Amazon ECS, AWS Lambda, and the Docker CLI, allowing you to simplify your development and production workflows. You can easily push your container images to Amazon ECR using the Docker CLI from your development machine, and integrated AWS services can pull them directly for production deployments. Publishing container software is as easy as a single command from the CI/CD workflows used in the software development process.

How it works

“At Pinterest we use Amazon Elastic Container Registry (ECR) for managing our Docker container images. We use ECR’s image scanning feature to help us improve security of our container images. ECR scans images for a broad range of operating system vulnerabilities and lets us build tools to act on the results.”

Cedric Staub, Engineering Manager, Pinterest

“At Blackboard, our mission is to advance learning for students, educators and institutions around the globe. In order to best serve clients, we use ECR because it provides a stable and secure container registry for Blackboard to host first and third-party images. ECR provides the high availability and uptime other registries fail to maintain, while providing a fully managed solution that has streamlined our workflows at Blackboard.”

Joel Snook, Director, DevOps Engineering

“Snowflake decided to replicate images to ECR, a fully managed Docker container registry, providing a reliable local registry to store images. Additional benefits for the local registry are that it’s not exclusive to Joshua; all platform components required for Snowflake clusters can be cached in the local ECR Registry. For additional security and performance Snowflake uses AWS PrivateLink to keep all network traffic from ECR to the worker nodes within the AWS network. It also resolved rate-limiting issues from pulling images from a public registry with unauthenticated requests, unblocking other cluster nodes from pulling critical images for operation.”

Brian Nutt, Senior Software Engineer, Snowflake


Overview

The Docker Compose CLI enables developers to use native Docker commands to run applications in Amazon Elastic Container Service (ECS) when building cloud-native applications.

The integration between Docker and Amazon ECS allows developers to use the Docker Compose CLI to:

- Set up an AWS context in one Docker command, allowing you to switch from a local context to a cloud context and run applications quickly and easily

- Simplify multi-container application development on Amazon ECS using Compose files

Also see the ECS integration architecture, full list of compose features and Compose examples for ECS integration.

Prerequisites

To deploy Docker containers on ECS, you must meet the following requirements:

Download and install Docker Desktop Stable version 2.3.0.5 or later, or Edge version 2.3.2.0 or later.

Alternatively, install the Docker Compose CLI for Linux.

Ensure you have an AWS account.

Docker not only runs multi-container applications locally, but also enables developers to seamlessly deploy Docker containers on Amazon ECS using a Compose file with the docker compose up command. The following sections contain instructions on how to deploy your Compose application on Amazon ECS.

Run an application on ECS

Requirements

AWS uses a fine-grained permission model, with a specific role for each resource type and operation.

To ensure that Docker ECS integration is allowed to manage resources for your Compose application, your AWS credentials must grant the following AWS IAM permissions:

- application-autoscaling:*

- cloudformation:*

- ec2:AuthorizeSecurityGroupIngress

- ec2:CreateSecurityGroup

- ec2:CreateTags

- ec2:DeleteSecurityGroup

- ec2:DescribeRouteTables

- ec2:DescribeSecurityGroups

- ec2:DescribeSubnets

- ec2:DescribeVpcs

- ec2:RevokeSecurityGroupIngress

- ecs:CreateCluster

- ecs:CreateService

- ecs:DeleteCluster

- ecs:DeleteService

- ecs:DeregisterTaskDefinition

- ecs:DescribeClusters

- ecs:DescribeServices

- ecs:DescribeTasks

- ecs:ListAccountSettings

- ecs:ListTasks

- ecs:RegisterTaskDefinition

- ecs:UpdateService

- elasticloadbalancing:*

- iam:AttachRolePolicy

- iam:CreateRole

- iam:DeleteRole

- iam:DetachRolePolicy

- iam:PassRole

- logs:CreateLogGroup

- logs:DeleteLogGroup

- logs:DescribeLogGroups

- logs:FilterLogEvents

- route53:CreateHostedZone

- route53:DeleteHostedZone

- route53:GetHealthCheck

- route53:GetHostedZone

- route53:ListHostedZonesByName

- servicediscovery:*
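One way to grant these permissions is to attach a policy that lists them as allowed actions. The Python sketch below builds such a policy document. The action list is deliberately abbreviated, and the wildcard Resource is an assumption made to keep the example short; in practice you would include every action listed above and narrow the resource scope.

```python
import json

# Abbreviated subset of the permissions listed above; extend as needed.
REQUIRED_ACTIONS = [
    "application-autoscaling:*",
    "cloudformation:*",
    "ec2:CreateSecurityGroup",
    "ecs:CreateCluster",
    "ecs:CreateService",
    "iam:PassRole",
    "logs:CreateLogGroup",
    "servicediscovery:*",
]


def build_policy(actions):
    """Return an IAM policy document that allows the given actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": sorted(set(actions)),
                # Assumption: "*" keeps the sketch short; scope this down in practice.
                "Resource": "*",
            }
        ],
    }


policy = build_policy(REQUIRED_ACTIONS)
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM console or saved to a file and attached to the user or role whose credentials the Docker context uses.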

GPU support, which relies on EC2 instances to run containers with attached GPU devices, requires a few additional permissions:

- ec2:DescribeVpcs

- autoscaling:*

- iam:CreateInstanceProfile

- iam:AddRoleToInstanceProfile

- iam:RemoveRoleFromInstanceProfile

- iam:DeleteInstanceProfile

Create AWS context

Run the docker context create ecs myecscontext command to create an Amazon ECS Docker context named myecscontext. If you have already installed and configured the AWS CLI, the setup command lets you select an existing AWS profile to connect to Amazon. Otherwise, you can create a new profile by passing an AWS access key ID and a secret access key. Finally, you can configure your ECS context to retrieve AWS credentials from AWS_* environment variables, which is a common way to integrate with third-party tools and single-sign-on providers.

After you have created an AWS context, you can list your Docker contexts by running the docker context ls command.

Run a Compose application

You can deploy and manage multi-container applications defined in Compose files to Amazon ECS using the docker compose command. To do this:

- Ensure you are using your ECS context. You can do this either by specifying the --context myecscontext flag with your command, or by setting the current context using the command docker context use myecscontext.

- Run docker compose up and docker compose down to start and then stop a full Compose application.

By default, docker compose up uses the compose.yaml or docker-compose.yaml file in the current folder. You can specify the working directory using the --workdir flag or specify the Compose file directly using docker compose --file mycomposefile.yaml up.

You can also specify a name for the Compose application using the --project-name flag during deployment. If no name is specified, a name will be derived from the working directory.

Docker ECS integration converts the Compose application model into a set of AWS resources, described as a CloudFormation template. The actual mapping is described in technical documentation. You can review the generated template using the docker compose convert command, and follow CloudFormation applying this model within the AWS web console when you run docker compose up, in addition to CloudFormation events being displayed in your terminal.

- You can view services created for the Compose application on Amazon ECS and their state using the docker compose ps command.

- You can view logs from containers that are part of the Compose application using the docker compose logs command.

Also see the full list of compose features.

Rolling update

To update your application without interrupting production flow you can simply use docker compose up on the updated Compose project. Your ECS services are created with rolling update configuration. As you run docker compose up with a modified Compose file, the stack will be updated to reflect changes, and if required, some services will be replaced. This replacement process will follow the rolling-update configuration set by your service's deploy.update_config configuration.

AWS ECS uses a percent-based model to define the number of containers to be run or shut down during a rolling update. The Docker Compose CLI computes rolling update configuration according to the parallelism and replicas fields. However, you might prefer to directly configure a rolling update using the extension fields x-aws-min_percent and x-aws-max_percent. The former sets the minimum percent of containers to run for a service, and the latter sets the maximum percent of additional containers to start before previous versions are removed.

By default, the ECS rolling update is set to run twice the number of containers for a service (200%), and has the ability to shut down 100% of containers during the update.
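To make the percent-based model concrete, the following Python sketch converts a replica count and the two percentages into container counts. It is an illustration rather than the Compose CLI's own computation, and it assumes the lower bound is rounded up and the upper bound is rounded down. The function name and the example values are hypothetical.

```python
def rolling_update_bounds(replicas, min_percent, max_percent):
    """Return (min_running, max_running) container counts during a rolling update."""
    # Assumption: the lower bound rounds up and the upper bound rounds down.
    min_running = -(-replicas * min_percent // 100)  # ceiling division
    max_running = replicas * max_percent // 100      # floor division
    return min_running, max_running


# Hypothetical service: 4 replicas, x-aws-min_percent: 50, x-aws-max_percent: 200.
print(rolling_update_bounds(4, 50, 200))
```

With these example values, at least 2 containers keep running while up to 8 may exist at once, so the update can replace the service in large batches. A higher minimum percent trades update speed for capacity during the rollout.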

View application logs

The Docker Compose CLI configures the AWS CloudWatch Logs service for your containers. By default you can see logs of your Compose application the same way you check logs of local deployments.

A log group is created for the application as docker-compose/<application_name>, and log streams are created for each service and container in your application as <application_name>/<service_name>/<container_ID>.
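The naming scheme can be sketched as a few small Python helpers, which may be handy when locating or parsing streams in CloudWatch from your own tooling. The function names and the sample values are hypothetical; only the name patterns come from the description above.

```python
def log_group_name(application_name):
    """Log group created for a Compose application."""
    return f"docker-compose/{application_name}"


def log_stream_name(application_name, service_name, container_id):
    """Log stream created for one container of one service."""
    return f"{application_name}/{service_name}/{container_id}"


def parse_log_stream(stream_name):
    """Split a stream name back into (application, service, container ID)."""
    application, service, container_id = stream_name.split("/", 2)
    return application, service, container_id


print(log_group_name("myapp"))
print(log_stream_name("myapp", "web", "abc123"))
```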

You can fine tune AWS CloudWatch Logs using the extension field x-aws-logs_retention in your Compose file to set the number of retention days for log events. The default behavior is to keep logs forever.

You can also pass awslogs parameters to your container as standard Compose file logging.driver_opts elements. See AWS documentation for details on available log driver options.

Private Docker images

The Docker Compose CLI automatically configures authorization so you can pull private images from the Amazon ECR registry on the same AWS account. To pull private images from another registry, including Docker Hub, you’ll have to create a Username + Password (or a Username + Token) secret on the AWS Secrets Manager service.

For your convenience, the Docker Compose CLI offers the docker secret command, so you can manage secrets created on AWS Secrets Manager without having to install the AWS CLI.

First, create a token.json file to define your Docker Hub username and access token.

For instructions on how to generate access tokens, see Managing access tokens.

You can then create a secret from this file using docker secret.

Once created, you can use the secret's ARN in your Compose file through the x-aws-pull_credentials custom extension, together with the Docker image URI for your service.

Note

If you set the Compose file version to 3.8 or later, you can use the same Compose file for local deployment using docker-compose. Custom ECS extensions will be ignored in this case.

Service discovery

Service-to-service communication is implemented transparently by default, so you can deploy your Compose applications with multiple interconnected services without changing the compose file between local and ECS deployment. Individual services can run with distinct constraints (memory, cpu) and replication rules.

Service names

Services are registered automatically by the Docker Compose CLI on AWS Cloud Map during application deployment. They are declared as fully qualified domain names of the form: <service>.<compose_project_name>.local.

Services can retrieve their dependencies using Compose service names (as they do when deploying locally with docker-compose), or optionally use the fully qualified names.

Dependent service startup time and DNS resolution

Services get concurrently scheduled on ECS when a Compose file is deployed. AWS Cloud Map introduces an initial delay before the DNS service can resolve your services' domain names. Your code needs to support this delay by waiting for dependent services to be ready, or by adding a wait-script as the entrypoint to your Docker image, as documented in Control startup order. Note that this need to wait for dependent services in your Compose application also exists when deploying locally with docker-compose, but the delay is typically shorter. Issues might become more visible when deploying to ECS if services do not wait for their dependencies to be available.

Alternatively, you can use the depends_on feature of the Compose file format. By doing this, the services that others depend on are created first, and application deployment waits for them to be up and running before it starts creating the services that depend on them.
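Waiting in application code can be as simple as retrying name resolution until it succeeds. The Python sketch below shows one possible approach. The helper names, retry counts, and the db/myapp example are all hypothetical, and the demo uses a stand-in resolver that fails twice so that it runs without any real DNS lookup.

```python
import socket
import time


def service_fqdn(service, project):
    """Fully qualified name of the form <service>.<compose_project_name>.local."""
    return f"{service}.{project}.local"


def wait_for_dns(hostname, attempts=30, delay=2.0,
                 resolve=socket.gethostbyname, sleep=time.sleep):
    """Retry DNS resolution until it succeeds or the attempts run out."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return resolve(hostname)
        except OSError:  # socket.gaierror is a subclass of OSError
            if attempt == attempts:
                raise
            sleep(delay)


# Demo with a stand-in resolver that fails twice, so no real lookup is made.
_failures = iter([True, True, False])


def fake_resolve(hostname):
    if next(_failures):
        raise socket.gaierror("name not yet registered")
    return "10.0.0.5"


address = wait_for_dns(service_fqdn("db", "myapp"), attempts=5, delay=0,
                       resolve=fake_resolve, sleep=lambda _: None)
print(address)
```

In a real service you would call wait_for_dns with the default resolver before opening connections to the dependency. Resolving the name only shows that the service is registered, so a connection-level retry may still be needed.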

Service isolation

Service isolation is implemented by Security Group rules, allowing services that share a common Compose file “network” to communicate with each other using their Compose service names.

Volumes

ECS integration supports volume management based on Amazon Elastic File System (Amazon EFS). For a Compose file to declare a volume, ECS integration will define creation of an EFS file system within the CloudFormation template, with a Retain policy so data won’t be deleted on application shut-down. If the same application (same project name) is deployed again, the file system will be re-attached to offer the same user experience developers are used to with docker-compose.

A basic Compose service declares a volume by mounting it under the service and listing it in the top-level volumes section.

With no specific volume options, the volume still must be declared in the volumes section for the Compose file to be valid (for example, as an empty mydata: entry). If required, the initial file system can be customized using driver_opts.

File systems created by executing docker compose up on AWS can be listed using docker volume ls and removed with docker volume rm <filesystemID>.

An existing file system can also be used by users who already have data stored on EFS or want to use a file system created by another Compose stack.

Accessing a volume from a container can introduce POSIX user ID permission issues, as Docker images can define an arbitrary user ID / group ID for the process to run inside a container. However, the same uid:gid will have to match POSIX permissions on the file system. To work around the possible conflict, you can set the volume uid and gid to be used when accessing a volume.

Secrets

You can pass secrets to your ECS services using the Docker model to bind sensitive data as files under /run/secrets. If your Compose file declares a secret as a file, such a secret will be created as part of your application deployment on ECS. If you use an existing secret as an external: true reference in your Compose file, use the full AWS Secrets Manager ARN as the secret name.

Secrets will be available at runtime for your service as a plain text file, for example /run/secrets/foo for a secret named foo.

AWS Secrets Manager allows you to store sensitive data either as plain text (like a Docker secret does), or as a hierarchical JSON document. You can use the latter with the Docker Compose CLI by using the custom field x-aws-keys to define which entries in the JSON document to bind as a secret in your service container.

By doing this, the secret for a bar key will be available at runtime for your service as a plain text file /run/secrets/foo/bar. You can use the special value * to get all keys bound in your container.
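The mapping from JSON keys to files can be illustrated with a short Python sketch. It models only the resulting path layout described above, not how the Compose CLI or ECS performs the binding, and the function name and sample secret content are hypothetical.

```python
import json


def secret_files(secret_name, secret_json, keys):
    """Map selected keys of a JSON secret to the files a container would see."""
    document = json.loads(secret_json)
    # The special value "*" selects every key in the document.
    selected = list(document) if keys == ["*"] else keys
    return {
        f"/run/secrets/{secret_name}/{key}": str(document[key])
        for key in selected
    }


sample = '{"bar": "s3cret", "baz": "other"}'  # hypothetical secret content
print(secret_files("foo", sample, ["bar"]))
print(secret_files("foo", sample, ["*"]))
```

Listing only the keys a service needs keeps unrelated entries of a shared JSON secret out of that container.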

Auto scaling

Static scaling information for a service (without auto scaling) can be specified using the normal Compose syntax, such as the replicas field.

The Compose file model does not define any attributes to declare auto-scaling conditions. Therefore, we rely on the x-aws-autoscaling custom extension to define the auto-scaling range, as well as cpu or memory to define the target metric, expressed as a resource usage percent.

IAM roles

Your ECS Tasks are executed with a dedicated IAM role, granting access to the AWS managed policies AmazonECSTaskExecutionRolePolicy and AmazonEC2ContainerRegistryReadOnly. In addition, if your service uses secrets, the IAM role gets additional permissions to read and decrypt secrets from AWS Secrets Manager.

You can grant additional managed policies to your service execution by using x-aws-policies inside a service definition.

You can also write your own IAM Policy Document to fine tune the IAM role to be applied to your ECS service, and use x-aws-role inside a service definition to pass the YAML-formatted policy document.

Tuning the CloudFormation template

The Docker Compose CLI relies on Amazon CloudFormation to manage the application deployment. To get more control on the created resources, you can use docker compose convert to generate a CloudFormation stack file from your Compose file. This allows you to inspect resources it defines, or customize the template for your needs, and then apply the template to AWS using the AWS CLI, or the AWS web console.

Once you have identified the changes required to your CloudFormation template, you can include overlays in your Compose file that will be automatically applied on compose up. An overlay is a YAML object that uses the same CloudFormation template data structure as the one generated by ECS integration, but only contains attributes to be updated or added. It will be merged with the generated template before being applied on the AWS infrastructure.
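The effect of an overlay can be pictured as a recursive merge of two nested structures. The Python sketch below is a simplified model of that idea, not the Compose CLI's merge code. The exact merge rules (for example, how lists are combined) are an assumption, and the resource name WebappTCP80TargetGroup is a hypothetical placeholder.

```python
import copy


def apply_overlay(template, overlay):
    """Return a copy of template with overlay attributes added or replaced."""
    merged = copy.deepcopy(template)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Descend into nested mappings so sibling attributes are kept.
            merged[key] = apply_overlay(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical fragment of a generated template.
generated = {
    "Resources": {
        "WebappTCP80TargetGroup": {
            "Type": "AWS::ElasticLoadBalancingV2::TargetGroup",
            "Properties": {"Port": 80, "Protocol": "HTTP"},
        }
    }
}

# The overlay names only the attribute to add.
overlay = {
    "Resources": {
        "WebappTCP80TargetGroup": {"Properties": {"HealthCheckPath": "/health"}}
    }
}

result = apply_overlay(generated, overlay)
print(result["Resources"]["WebappTCP80TargetGroup"]["Properties"])
```

Because the overlay names only what changes, it stays valid when unrelated parts of the generated template change between CLI versions.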

Adjusting Load Balancer http HealthCheck configuration

While the ECS cluster uses the HealthCheck command on a container to get service health, Application Load Balancers define their own URL-based HealthCheck mechanism so traffic gets routed. As the Compose model does not offer such an abstraction (yet), the default one is applied, which queries your service under / expecting HTTP status code 200.

You can tweak this behavior using a CloudFormation overlay by following the AWS CloudFormation User Guide for configuration reference.

Setting SSL termination by Load Balancer

You can use an Application Load Balancer to handle the SSL termination for HTTPS services, so that your code, which runs inside a container, doesn’t have to. This is currently not supported by the ECS integration due to the lack of an equivalent abstraction in the Compose specification. However, you can rely on overlays to enable this feature on the generated Listeners configuration.

Using existing AWS network resources

By default, the Docker Compose CLI creates an ECS cluster for your Compose application, a Security Group per network in your Compose file on your AWS account’s default VPC, and a LoadBalancer to route traffic to your services.

With a basic Compose file that exposes port 80, the Docker Compose CLI will automatically create these ECS constructs, including the load balancer to route traffic to the exposed port 80.

If your AWS account does not have permissions to create such resources, or if you want to manage these yourself, you can use the following custom Compose extensions:

- Use x-aws-cluster as a top-level element in your Compose file to set the ID of an ECS cluster when deploying a Compose application. Otherwise, a cluster will be created for the Compose project.

- Use x-aws-vpc as a top-level element in your Compose file to set the ARN of a VPC when deploying a Compose application.

- Use x-aws-loadbalancer as a top-level element in your Compose file to set the ARN of an existing LoadBalancer.

The latter can be used by those who want to customize application exposure, typically to use an existing domain name for your application:

- Use the AWS web console or CLI to get your VPC and subnet IDs. You can retrieve the default VPC ID and attached subnets using the AWS CLI.

- Use the AWS CLI to create your load balancer. The AWS Web Console can also be used but will require adding at least one listener, which we don’t need here.

- To assign your application an existing domain name, configure your DNS with a CNAME entry pointing to the just-created load balancer’s DNSName, reported when you created the load balancer.

- Use the load balancer ARN to set x-aws-loadbalancer in your Compose file, and deploy your application using the docker compose up command.

Please note Docker ECS integration won’t be aware of this domain name, so the docker compose ps command will report URLs with the load balancer DNSName, not your own domain.

You can also use external: true inside a network definition in your Compose file so that the Docker Compose CLI does not create a Security Group, and set name to the ID of an existing SecurityGroup you want to use for network connectivity between services.

Local simulation

When you deploy your application on ECS, you may also rely on additional AWS services. In such cases, your code must embed the AWS SDK and retrieve API credentials at runtime. AWS offers a credentials discovery mechanism which is fully implemented by the SDK, and relies on accessing a metadata service on a fixed IP address.

Once you adopt this approach, running your application locally for testing or debugging purposes can be difficult. Therefore, we have introduced an option on context creation to set the ecs-local context, to maintain application portability between the local workstation and the AWS cloud provider.

When you select a local simulation context, running the docker compose up command doesn’t deploy your application on ECS. Instead, it runs the application locally, automatically adjusting your Compose application so it includes the ECS local endpoints. This allows the AWS SDK used by application code to access a local mock container as the “AWS metadata API” and retrieve credentials from your own local .aws/credentials config file.

Install the Docker Compose CLI on Linux

The Docker Compose CLI adds support for running and managing containers on ECS.

Install Prerequisites

Docker 19.03 or later.

Install script

You can install the new CLI using the install script.

FAQ

What does the error this tool requires the 'new ARN resource ID format' mean?

This error message means that your account requires the new ARN resource ID format for ECS. To learn more, see Migrating your Amazon ECS deployment to the new ARN and resource ID format.

Feedback

Thank you for trying out the Docker Compose CLI. Your feedback is very important to us. Let us know your feedback by creating an issue in the Compose CLI GitHub repository.
